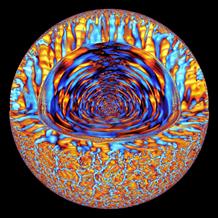

The Universe consists of a multitude of objects (planets, stars, the interstellar medium, galaxies, etc.) whose dynamic behaviour is often non-linear and spans a wide range of spatial, energy and time scales. Numerical simulation on high-performance computing (HPC) systems is an ideal tool for understanding how they function, using numerical approximations to solve the complex equations of plasma dynamics coupled with processes such as compressibility, magnetism, radiation, gravitation, etc.

Data mining and efficient exploitation of data

To improve the realism of these simulations, ever higher spatial or spectral resolution (in energy or wavelength) and ever more physical processes must be taken into account simultaneously, generating vast data sets for exploration and analysis. Astrophysicists are thus faced with problems of data mining and the efficient exploitation of data (data analytics), common to the Big Data and High Throughput Computing (HTC) communities. Today, to understand the Sun, the evolution of galaxies or the formation of stars, the spatial discretisation of simulated objects requires increasing resolution (cells) in 3 dimensions, with the most ambitious calculations on current petaflop computers reaching as many as 4,000 cells per dimension, or a total of 64 billion. In each cell, several physical fields or variables (their number increases with the amount of physical content) are tracked over time and are numerically represented by “double precision” real numbers (stored on 8 bytes). Consequently, each variable of interest, stored over the 64 billion cells, occupies 64 × 10⁹ × 8 bytes, or just over 500 GB. For the 8 physical variables traditionally used in astrophysics (density, temperature, the 3 components of velocity and the 3 components of the magnetic field), this means ~4 TB per instant of time. In order to provide statistically significant time averages, hundreds to thousands of these “time steps/snapshots” are necessary, which means that, for a given picture of the dynamics of a celestial object, datasets of about a petabyte must be managed. As parametric studies are often necessary, the scale of the task rapidly becomes apparent if this volume has to be multiplied by 10, 20 or more to cover the parameter space. This is all the more true as the arrival of exaflop/s computing will further reinforce this trend or even render it critical, by allowing simulations comprising more than a thousand billion grid cells.
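The storage arithmetic above can be reproduced with a short Python sketch. It is only an illustration: the function names are hypothetical, decimal units are assumed (1 GB = 10⁹ bytes), and the figure of 250 snapshots is an arbitrary value chosen from the "hundreds to thousands" range to show how the petabyte scale is reached.

```python
# Back-of-the-envelope storage estimate for a 3D simulation grid.
# All names and the snapshot count are illustrative assumptions.

BYTES_PER_DOUBLE = 8  # "double precision" real number


def snapshot_bytes(cells_per_dim: int, n_vars: int) -> int:
    """Bytes needed to store n_vars double-precision fields on a cubic grid."""
    n_cells = cells_per_dim ** 3
    return n_cells * n_vars * BYTES_PER_DOUBLE


def dataset_bytes(cells_per_dim: int, n_vars: int, n_snapshots: int) -> int:
    """Bytes needed for a time series of snapshots."""
    return snapshot_bytes(cells_per_dim, n_vars) * n_snapshots


if __name__ == "__main__":
    GB, TB, PB = 10**9, 10**12, 10**15  # decimal units (assumption)

    one_var = snapshot_bytes(4000, 1)       # a single field on 4,000^3 cells
    eight_vars = snapshot_bytes(4000, 8)    # density, temperature, 3 velocity, 3 magnetic
    series = dataset_bytes(4000, 8, 250)    # 250 snapshots: illustrative value

    print(f"cells:              {4000 ** 3:,}")
    print(f"one variable:       {one_var / GB:.0f} GB")
    print(f"eight variables:    {eight_vars / TB:.3f} TB per snapshot")
    print(f"250 snapshots:      {series / PB:.3f} PB")
```

Under the same assumptions, a grid of a thousand billion cells (for example 10,000 cells per dimension) would need `snapshot_bytes(10000, 8)`, that is 64 TB, for a single snapshot of the same 8 variables.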

Correlations are not that useful

To further clarify the data analytics problem for HPC simulations in astrophysics, we should also point out that correlations are not that useful. In physics as in astrophysics, if a clear physical link cannot be established between two variables, a correlation between them is of very little interest. In addition, the physical quantities considered often have non-local dynamics or are vector fields; their structure and evolution over time then imply complex dynamics that are not easily reconstructed using traditional data mining processes.

A fresh look at current technical tools

To implement these analyses specific to astrophysics, a completely fresh look must be taken at current technical tools and even at the structures of the data produced by the simulation codes. The aim is to optimise the performance of the I/O and analysis algorithms, reduce the memory footprint of the data structures and, finally, improve the energy efficiency of processing very high volumes of data, both today and tomorrow. In addition, to make the best use of the data from astrophysical simulations, more and more initiatives are emerging in the international community to publish online, in open databases, not only the scientific results but also the raw data from the calculations. These databases are accessible to the widest possible audience (astrophysicists, other scientific communities, or even the general public), and encourage reuse of the data by developing augmented interfaces that enable the pertinent information to be located and extracted. CEA is also about to launch its own database dedicated to astrophysical simulations, as part of the COAST project (COmputational ASTrophysics at Saclay).

So there is indeed a Big Data problem in HPC astrophysical simulations, but it requires a specific approach based on physical models in order to capture the subtlety of the non-linear and non-local processes at work in celestial objects; it cannot simply rely on multipoint correlations.