Big Data is regarded as both one of the greatest challenges and one of the greatest opportunities in many scientific, technological and industrial fields. In cosmology, it could help solve some of the mysteries of the Universe, but it could also expose flaws in Einstein’s theory of general relativity.

But the volume of data acquired poses serious problems for calibration, archiving and access, as well as for the scientific exploitation of the resulting products (images, spectra, catalogues, etc.). The archive of the future Euclid space mission is expected to hold 150 petabytes of data, and the Square Kilometre Array (SKA) project will generate 2 terabytes of data per second, of which 1 petabyte per day will be archived.
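
To put the SKA figures in perspective, here is a quick back-of-the-envelope calculation. It takes the two quoted numbers at face value and assumes a constant 2 TB/s stream and decimal prefixes (1 PB = 1000 TB). On those assumptions the raw stream amounts to roughly 173 PB per day, so only about 0.6% of it would reach the archive. The helper name `daily_volume_pb` is purely illustrative.

```python
# Back-of-the-envelope check of the SKA data rates quoted above.
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 s
TB_PER_PB = 1000                 # decimal SI prefixes assumed


def daily_volume_pb(rate_tb_per_s: float) -> float:
    """Volume (PB) produced in one day by a constant stream of rate_tb_per_s TB/s."""
    return rate_tb_per_s * SECONDS_PER_DAY / TB_PER_PB


raw_pb_per_day = daily_volume_pb(2.0)   # SKA raw output: 2 TB/s
archived_pb_per_day = 1.0               # SKA archive: 1 PB/day
kept_fraction = archived_pb_per_day / raw_pb_per_day

print(f"raw stream: {raw_pb_per_day:.1f} PB/day")     # raw stream: 172.8 PB/day
print(f"fraction archived: {kept_fraction:.2%}")      # fraction archived: 0.58%
```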

Algorithmic and computational challenges

The challenge is to analyse these datasets with algorithms capable of revealing signals with a very low signal-to-noise ratio, drawing on the most advanced methodologies: machine-learning techniques, statistical tools, and concepts from harmonic analysis, a field recently in the spotlight when the Abel Prize was awarded to Yves Meyer, one of the founders of wavelet theory.
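
To give a flavour of how wavelets help extract a signal from noise, the following is a minimal sketch of classical wavelet shrinkage (thresholding) denoising with NumPy. It illustrates the general technique only and is not the algorithm used by any of the teams mentioned here. It assumes a one-dimensional signal whose length is divisible by 2**levels, the simple orthonormal Haar wavelet, Gaussian noise of known standard deviation `sigma`, and the so-called universal threshold sigma·sqrt(2 ln N). All function names are hypothetical.

```python
import numpy as np


def haar_forward(x, levels):
    """Multi-level orthonormal Haar transform; len(x) must be divisible by 2**levels."""
    approx = np.asarray(x, dtype=float)
    details = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2))  # detail (difference) coefficients
        approx = (even + odd) / np.sqrt(2)         # smoothed approximation
    return approx, details


def haar_inverse(approx, details):
    """Exact inverse of haar_forward, rebuilding from the coarsest level upwards."""
    for d in reversed(details):
        out = np.empty(2 * approx.size)
        out[0::2] = (approx + d) / np.sqrt(2)
        out[1::2] = (approx - d) / np.sqrt(2)
        approx = out
    return approx


def soft_threshold(c, t):
    """Shrink coefficients towards zero by t; those with |c| <= t become exactly 0."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)


def wavelet_denoise(y, sigma, levels=4):
    """Denoise y (Gaussian noise of known std sigma) by soft-thresholding Haar details."""
    approx, details = haar_forward(y, levels)
    t = sigma * np.sqrt(2.0 * np.log(len(y)))  # universal threshold
    return haar_inverse(approx, [soft_threshold(d, t) for d in details])


def rms(residual):
    """Root-mean-square of a residual array."""
    return float(np.sqrt(np.mean(residual ** 2)))


# Usage: a piecewise-constant signal buried in Gaussian noise.
rng = np.random.default_rng(0)
n, sigma = 1024, 0.5
grid = np.linspace(0.0, 1.0, n)
clean = np.where((grid > 0.3) & (grid < 0.6), 2.0, 0.0)
noisy = clean + rng.normal(0.0, sigma, size=n)
denoised = wavelet_denoise(noisy, sigma)

print(f"RMS error before: {rms(noisy - clean):.3f}, after: {rms(denoised - clean):.3f}")
```

Soft thresholding zeroes the small wavelet coefficients, which are dominated by noise, and shrinks the larger ones, which carry the structured part of the signal; the coarse approximation coefficients are left untouched. The Haar wavelet is chosen here only for brevity.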

Developing such algorithms will be a real challenge for research teams in the coming years: their success will determine the scientific return on their involvement in the large international missions.

New scientific fields

These challenges have led to the emergence of a community of scientists from different backgrounds (astrophysics, statistics, computer science, signal processing and so on). Its goals are to promote methodologies, develop new algorithms, disseminate code, use it for the scientific exploitation of data, and train young researchers at the crossroads between disciplines. Two organisations have recently been created to promote astrostatistics and astroinformatics: the IAA (International Astrostatistics Association) and Commission 5 of the IAU (International Astronomical Union). Astrostatistics laboratories have been set up in the United States, in Great Britain (at Imperial College London) and in France at the CEA (the CosmoStat laboratory in the Astrophysics Department), and a computational astrophysics centre opened in New York in 2016.

The theory challenge

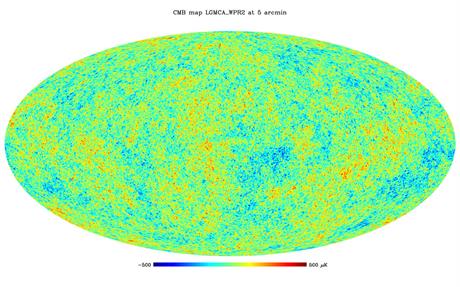

To understand the nature of dark energy and dark matter and to test Einstein’s theory of general relativity, the parameters of the standard cosmological model must be measured accurately, using data from space-based or ground-based telescopes.

For a long time, errors in the estimation of cosmological parameters were dominated by stochastic effects such as instrument noise or cosmic variance, which is amplified when only a small fraction of the sky is observed. Hence the use of increasingly sensitive detectors and the observation of increasingly large areas of sky. As these stochastic errors shrank, systematic errors became increasingly significant.
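
Why reducing stochastic errors exposes systematic ones can be shown with a toy simulation. It assumes Gaussian measurement noise and a constant, uncorrected bias, and all numerical values are hypothetical. Averaging N measurements shrinks the statistical error as sigma/sqrt(N) but leaves the systematic offset untouched, so beyond some N the offset dominates the error budget.

```python
import numpy as np

TRUE_VALUE = 0.30  # hypothetical true value of a cosmological parameter
BIAS = 0.01        # hypothetical uncorrected systematic offset
SIGMA = 1.0        # hypothetical per-measurement noise (stochastic error)


def estimate(n, rng):
    """Average n noisy, biased measurements; return (estimate, statistical error)."""
    samples = TRUE_VALUE + BIAS + rng.normal(0.0, SIGMA, size=n)
    return float(samples.mean()), SIGMA / np.sqrt(n)


rng = np.random.default_rng(42)
for n in (10**2, 10**4, 10**6):
    est, stat_err = estimate(n, rng)
    # As n grows, stat_err falls below BIAS and the total error tends to BIAS.
    print(f"N = {n:>9,}: total error {est - TRUE_VALUE:+.4f}, "
          f"statistical error {stat_err:.4f}, systematic error {BIAS:.4f}")
```

No amount of additional data removes the offset; only a better model of its cause can.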

The clearest example of this phenomenon is probably the announcement, in March 2014, of the detection of primordial gravitational waves by the American BICEP2 team. It was subsequently found that the signal was indeed real, but that it came from dust in our own galaxy: an error in modelling the emission of this dust had left a residual signal in the data.

In addition to stochastic and systematic errors, Big Data gives rise to a new type of error: approximation errors. Because certain quantities are too costly to compute exactly with existing technology, approximations are introduced into the equations to reduce computing time or to obtain an analytical solution. Managing these errors is essential to derive correct results, but it requires a significant theoretical effort.

The reproducible research challenge

With enormous volumes of data and highly complex algorithms, it is often impossible for a researcher to reproduce the figures published in an article. Yet reproducibility lies at the very heart of the scientific method, and the difficulty of achieving it is one of the major problems of modern science. Hence the principle of publishing, alongside the results, the source code used to analyse the data and the scripts used to process them and generate the figures. This principle, now crucial, is rigorously applied by the CosmoStat laboratory at CEA.
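
Here is a minimal sketch of the principle, assuming Python and NumPy. The hypothetical script below derives every random choice from one explicit seed, records the library version, and computes a SHA-256 fingerprint of its numerical output. Anyone rerunning it with the same seed and software versions should obtain the same fingerprint, which gives a quick check that a published result has been reproduced. The function names and the seed value are illustrative only.

```python
import hashlib
import json

import numpy as np


def run_analysis(seed):
    """Hypothetical analysis step: all randomness flows from one explicit seed."""
    rng = np.random.default_rng(seed)
    data = rng.normal(size=10_000)  # stands in for simulated or observed data
    return {
        "seed": seed,
        "numpy_version": np.__version__,  # record the environment alongside results
        "mean": float(data.mean()),
        "std": float(data.std()),
    }


def fingerprint(result):
    """SHA-256 digest of a canonical (key-sorted) JSON encoding of the results."""
    blob = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


first = fingerprint(run_analysis(seed=12345))
second = fingerprint(run_analysis(seed=12345))
print("reproduced" if first == second else "MISMATCH")  # prints: reproduced
```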